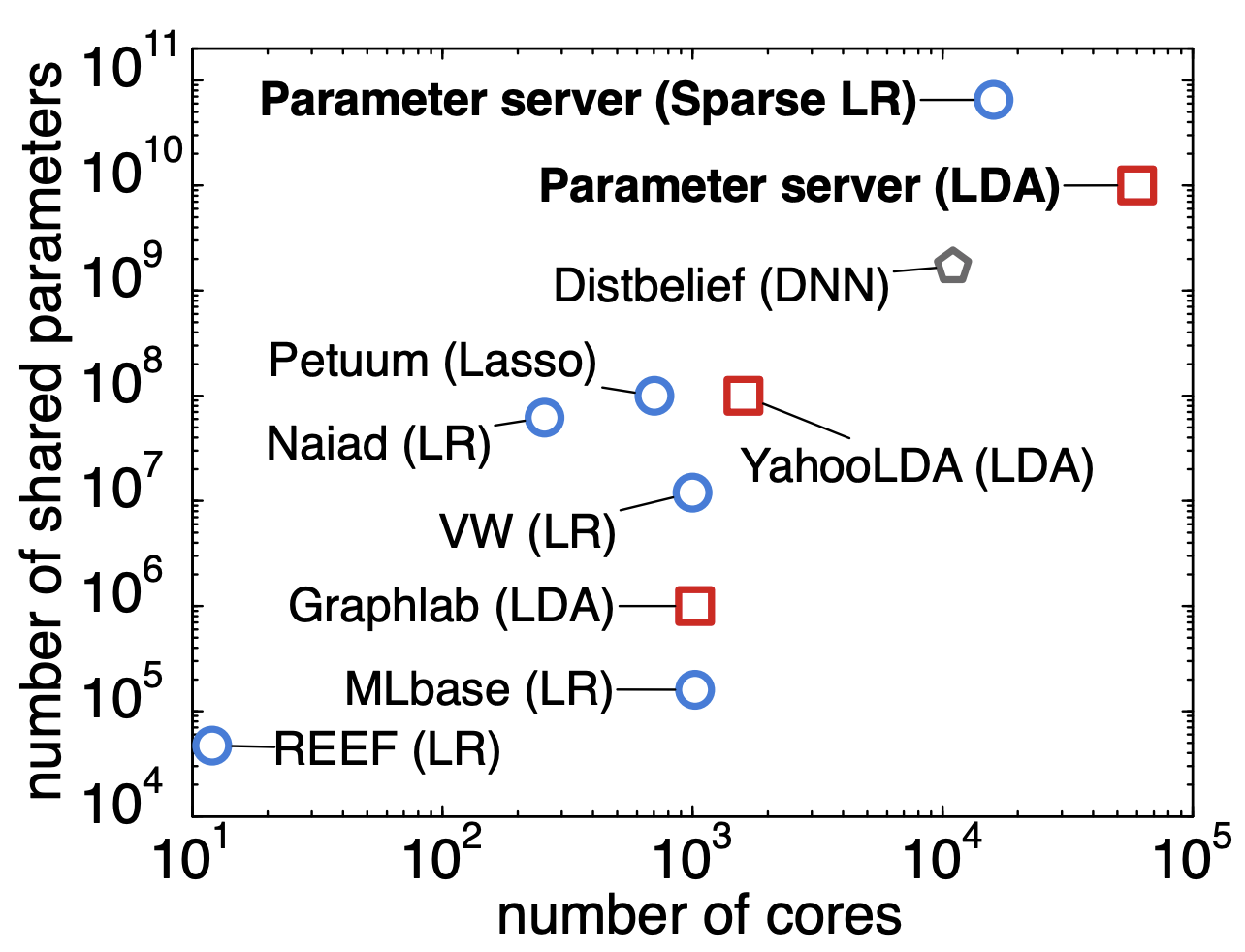

100 Terabytes, 5 Billion Documents, 10 Billion Parameters, 1 Billion Inserts/s

parameter server

distributed learning

We’ve been busy building the next generation of a Parameter Server and it’s finally ready. Check out the OSDI 2014 paper by Li et al. It’s quite different from our previous designs, the main improvements being fault tolerance and self-repair, a much improved network protocol…

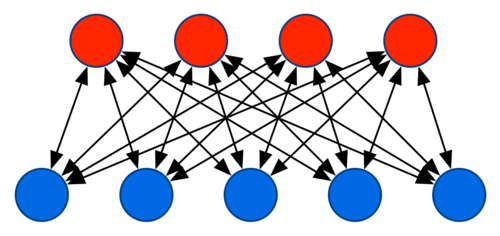

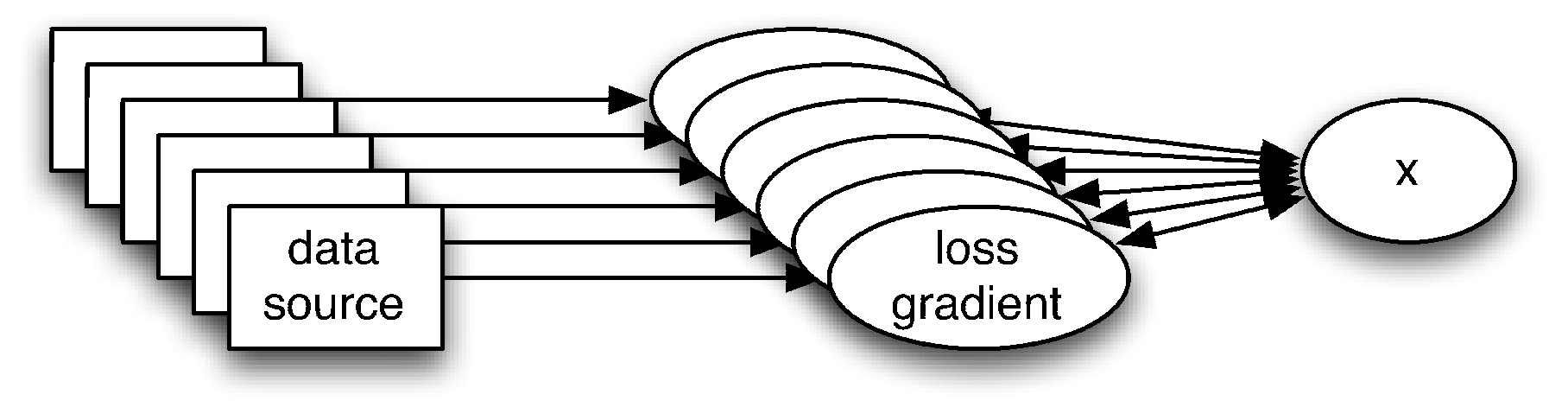

Distributed synchronization with the distributed star

distributed synchronization

hashing

Here’s a simple synchronization paradigm between many computers that scales with the number of machines involved and essentially keeps the cost at \(O(1)\) per machine. For lack of a better name I’m going to call it the distributed star, since this is what the communication looks like. It’s quite similar to how memcached stores…
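As a rough illustration of the idea, here is a minimal single-process sketch. It assumes that each key is assigned to one owning machine by hashing and that every machine pushes its updates to the owner and reads the aggregate back. The names `owner` and `synchronize` are hypothetical and not taken from the post, and the machines are simulated as plain dictionaries rather than real network peers.

```python
import hashlib
from collections import defaultdict


def owner(key, num_machines):
    """Map a key to the machine that aggregates it (assumed: plain hashing)."""
    digest = hashlib.blake2b(key.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "little") % num_machines


def synchronize(local_updates):
    """Aggregate per-key updates across machines.

    local_updates is a list with one dict per machine, mapping key -> update.
    Returns one dict per machine holding the summed value of every key
    that machine touched.
    """
    n = len(local_updates)
    shards = [defaultdict(float) for _ in range(n)]

    # Push: every machine sends each update to the key's owner, which sums them.
    for updates in local_updates:
        for key, value in updates.items():
            shards[owner(key, n)][key] += value

    # Pull: every machine reads the aggregated values back from the owners.
    return [
        {key: shards[owner(key, n)][key] for key in updates}
        for updates in local_updates
    ]


local = [{"a": 1.0, "b": 2.0}, {"a": 3.0}]
synced = synchronize(local)
```

If the hash spreads keys evenly, each machine owns roughly a \(1/n\) share of the keys, which is one way to read the claim that the per-machine cost stays roughly constant as machines are added.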

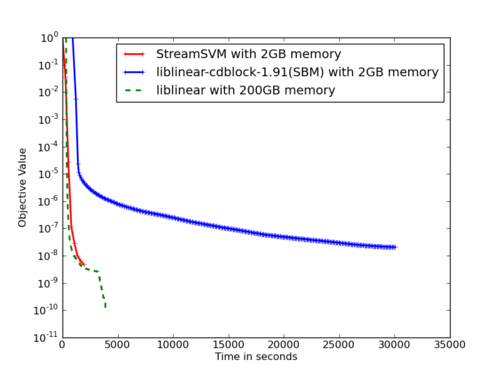

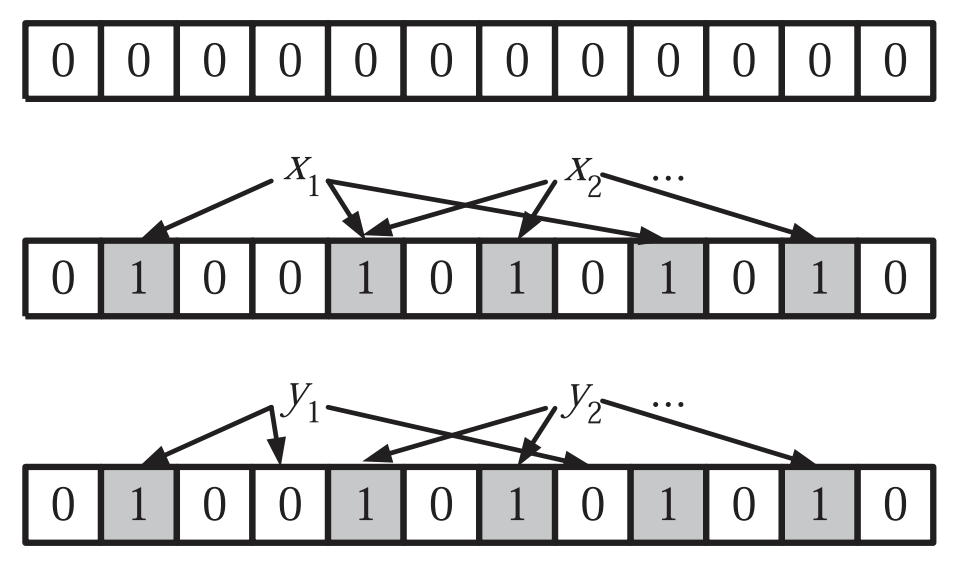

Bloom Filters

Bloom filter

hashing

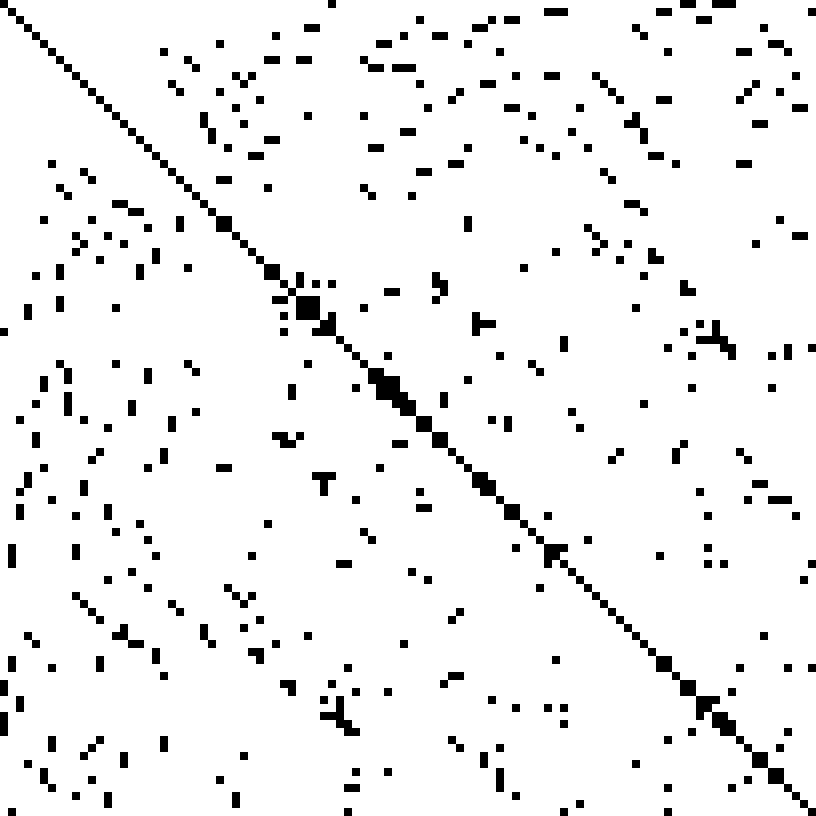

Bloom filters are one of the really ingenious and simple building blocks for randomized data structures. A great summary is the paper by Broder and Mitzenmacher, 2005. The figure above is from their paper. In this post I will briefly review the key ideas behind Bloom filters, since they form the basis of the Count-Min…
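For readers who want something concrete, the following is a minimal sketch of a standard Bloom filter, not code from the post or the paper. It assumes string items and derives the hash functions from salted BLAKE2b digests. The class name `BloomFilter` and the parameters `num_bits` and `num_hashes` are illustrative choices.

```python
import hashlib


class BloomFilter:
    """Bit array with num_hashes hash functions; no false negatives."""

    def __init__(self, num_bits, num_hashes):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item):
        # One salted digest per hash function, reduced to a bit index.
        data = item.encode("utf-8")
        for seed in range(self.num_hashes):
            salt = seed.to_bytes(8, "little")
            digest = hashlib.blake2b(data, digest_size=8, salt=salt).digest()
            yield int.from_bytes(digest, "little") % self.num_bits

    def add(self, item):
        # Insertion sets every bit the item hashes to.
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        # Membership holds only if all of the item's bits are set.
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


bf = BloomFilter(num_bits=1024, num_hashes=3)
for word in ["parameter", "server", "hashing"]:
    bf.add(word)
```

After the insertions, `"hashing" in bf` is guaranteed to be true. A query for an item that was never added usually returns false but can return true, which is the false-positive behaviour that makes the structure so compact.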

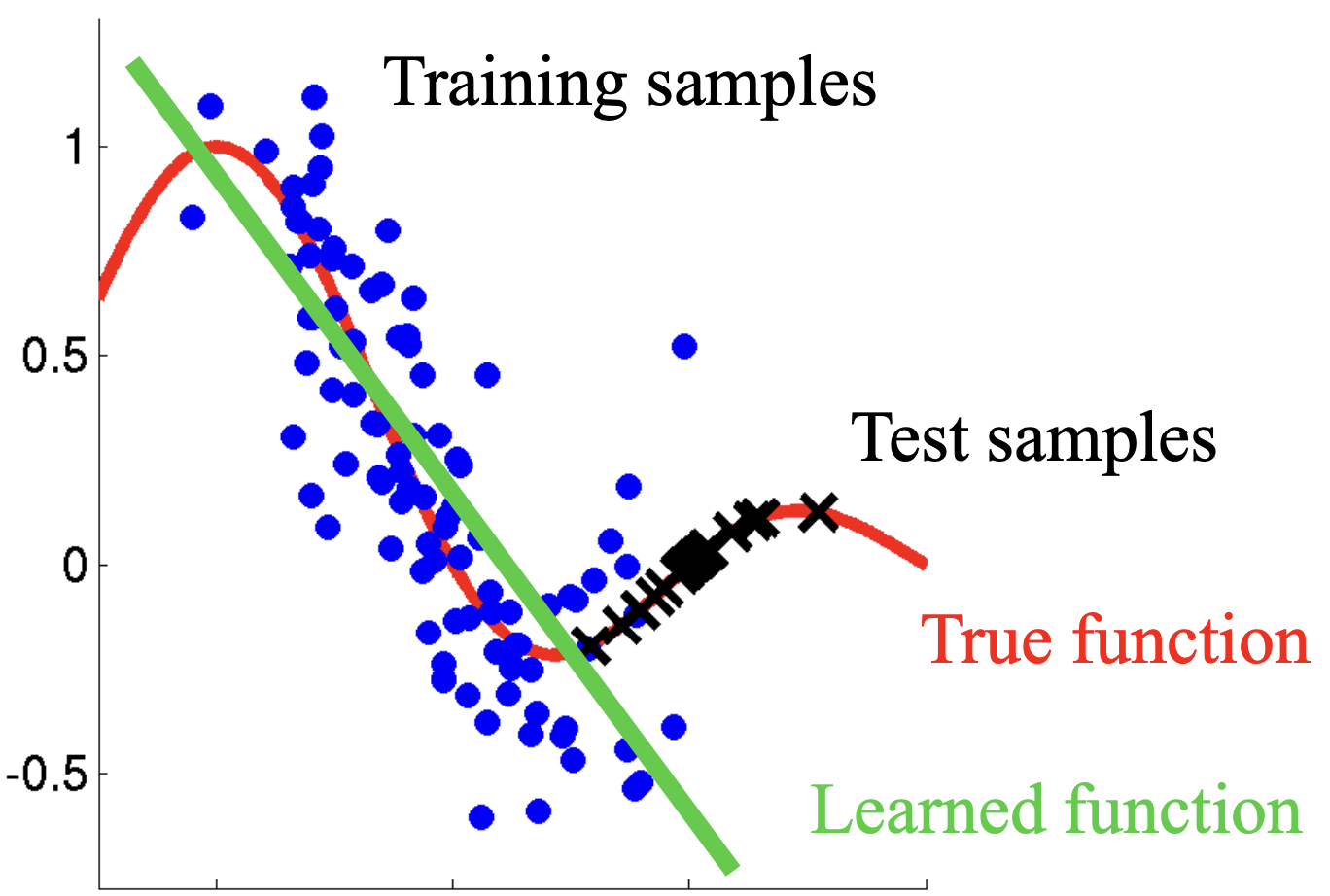

Collaborative Filtering considered harmful

collaborative filtering

search

Much excellent work has been published on…

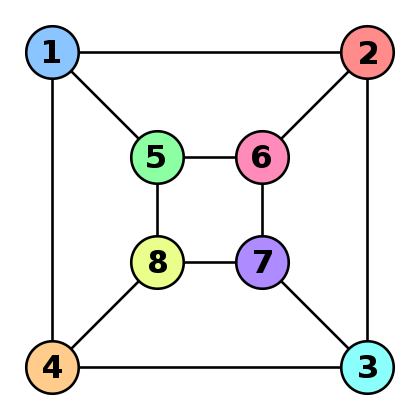

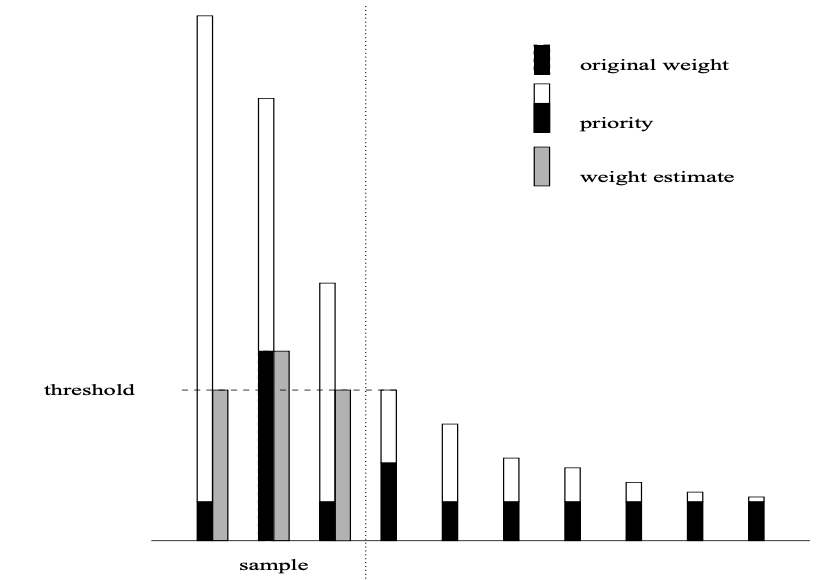

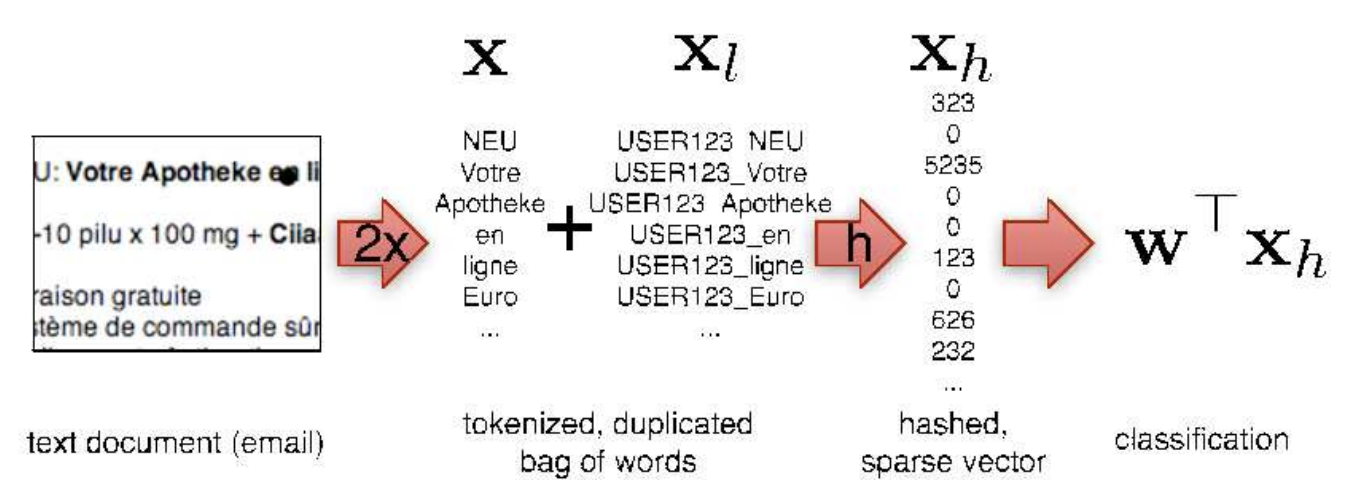

Hashing for Collaborative Filtering

hashing

collaborative filtering

This is a follow-up on the hashing for linear functions post. It’s based on the HashCoFi paper that Markus Weimer, Alexandros Karatzoglou and I wrote for AISTATS’10. It deals with the issue of running out of memory when you want to use collaborative filtering for…
